Introduction to Big Data and its Ecosystem

Guillaume Eynard-Bontemps, CNES (Centre National d’Etudes Spatiales - French Space Agency)

2020-11-15

What is Big Data?

Data evolution

1 ZB

1,000,000 PB

1,000,000,000,000 GB

1,000,000,000,000,000,000,000 B
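The equivalences above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming decimal (SI) units where each step is a factor of 1000; the `UNITS` list and the `convert` helper are illustrative names, not part of any library.

```python
# Decimal (SI) byte units: each step up the list is a factor of 1000.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]


def convert(value, from_unit, to_unit):
    """Convert a quantity between two decimal byte units (hypothetical helper)."""
    exponent = UNITS.index(from_unit) - UNITS.index(to_unit)
    return value * 1000 ** exponent


one_zb_in_pb = convert(1, "ZB", "PB")  # 10**6
one_zb_in_gb = convert(1, "ZB", "GB")  # 10**12
one_zb_in_b = convert(1, "ZB", "B")    # 10**21

print(f"1 ZB = {one_zb_in_pb:,} PB = {one_zb_in_gb:,} GB = {one_zb_in_b:,} B")
```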

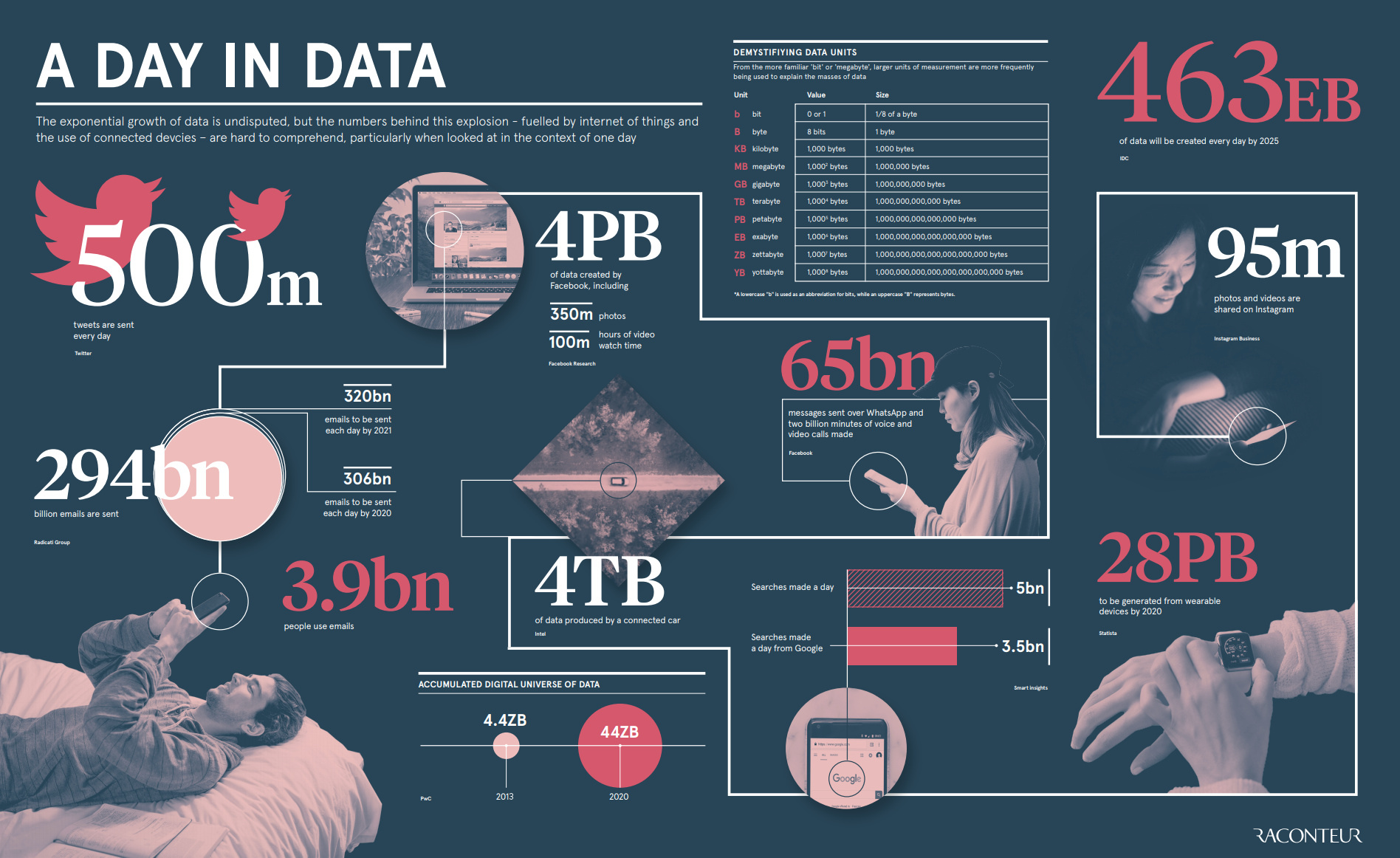

Some figures

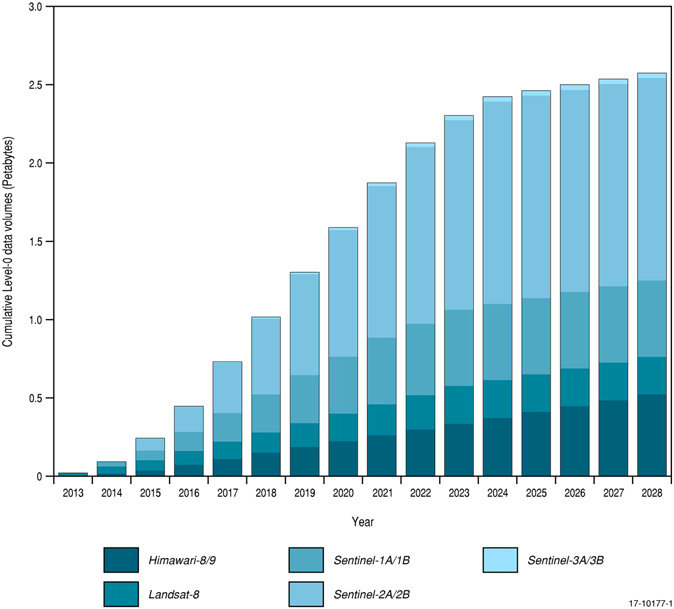

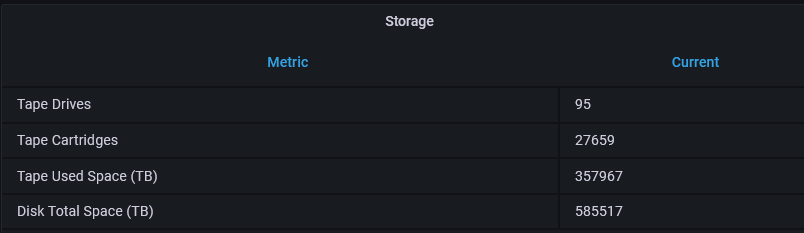

Some figures in sciences

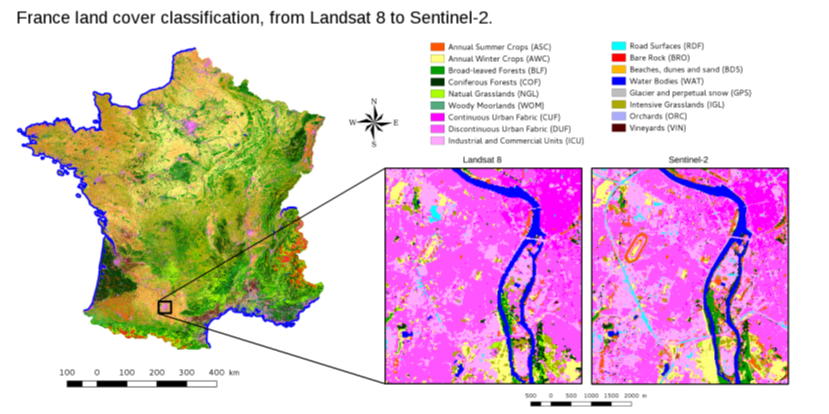

Earth Observation Data

CERN

- The LHC experiments produce about 90 petabytes of data per year

- Other (non-LHC) experiments at CERN produce an additional 25 petabytes of data per year

3V, 4V, 5V

What is Behind Big Data

Data

Volume, variety, multiple sources, internal, external…

Tools and technology

Store, Compute, Analyse: HPC clusters, Cloud, Hadoop, Spark, Dask

Visualize, Use: Applications, Web interfaces

Definition (Wikipedia)

Big data is a field that treats ways to analyze, systematically extract information from, or otherwise deal with data sets that are too large or complex to be dealt with by traditional data-processing application software.

Big data is where parallel computing tools are needed to handle data.

Not a technology.

Quiz

What is the estimated size of the global data sphere?

- Answer A: 175 Petabytes

- Answer B: 175 Exabytes

- Answer C: 175 Zettabytes

Answer link Key: yi

Quiz

Cite some V’s of Big Data (multiple choices):

- Answer A: Validation

- Answer B: Volume

- Answer C: Velocity

- Answer D: Voldemort

- Answer E: Variety

Answer link Key: rf

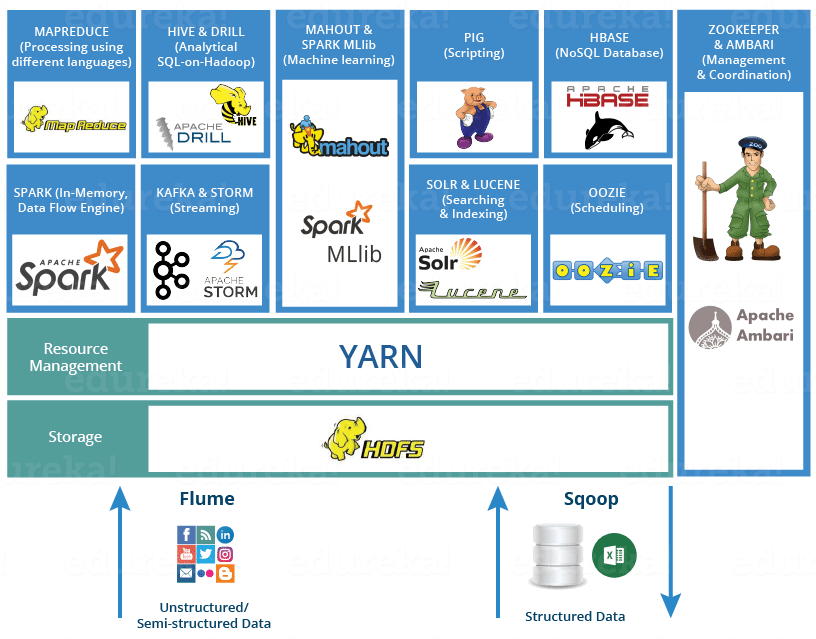

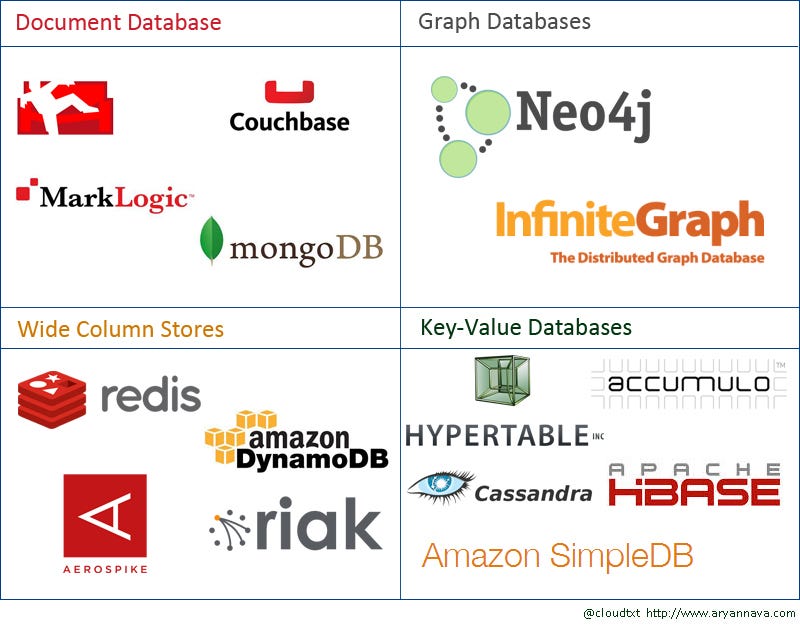

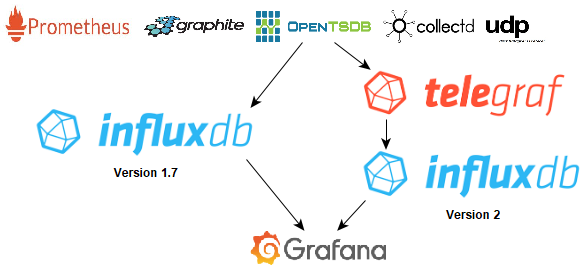

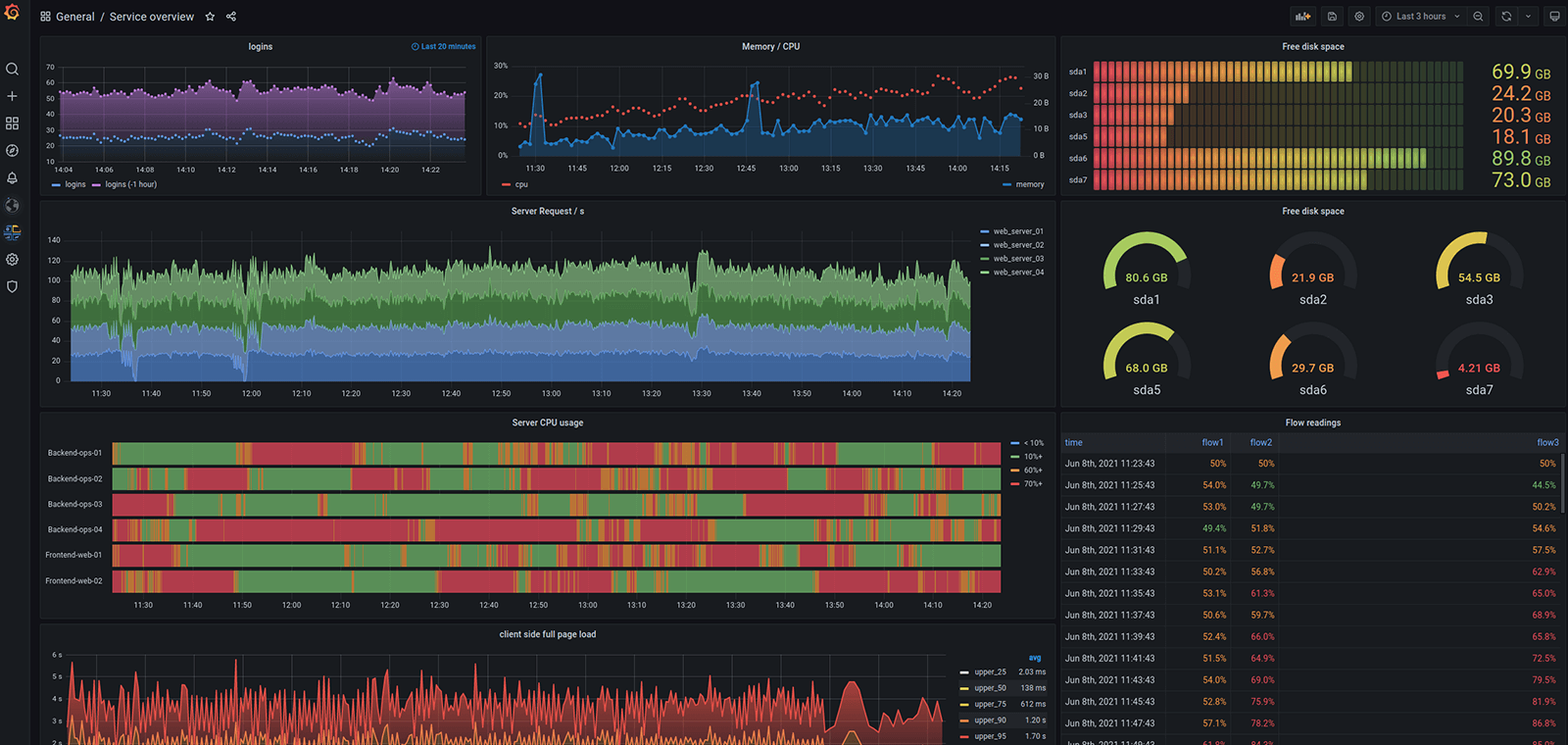

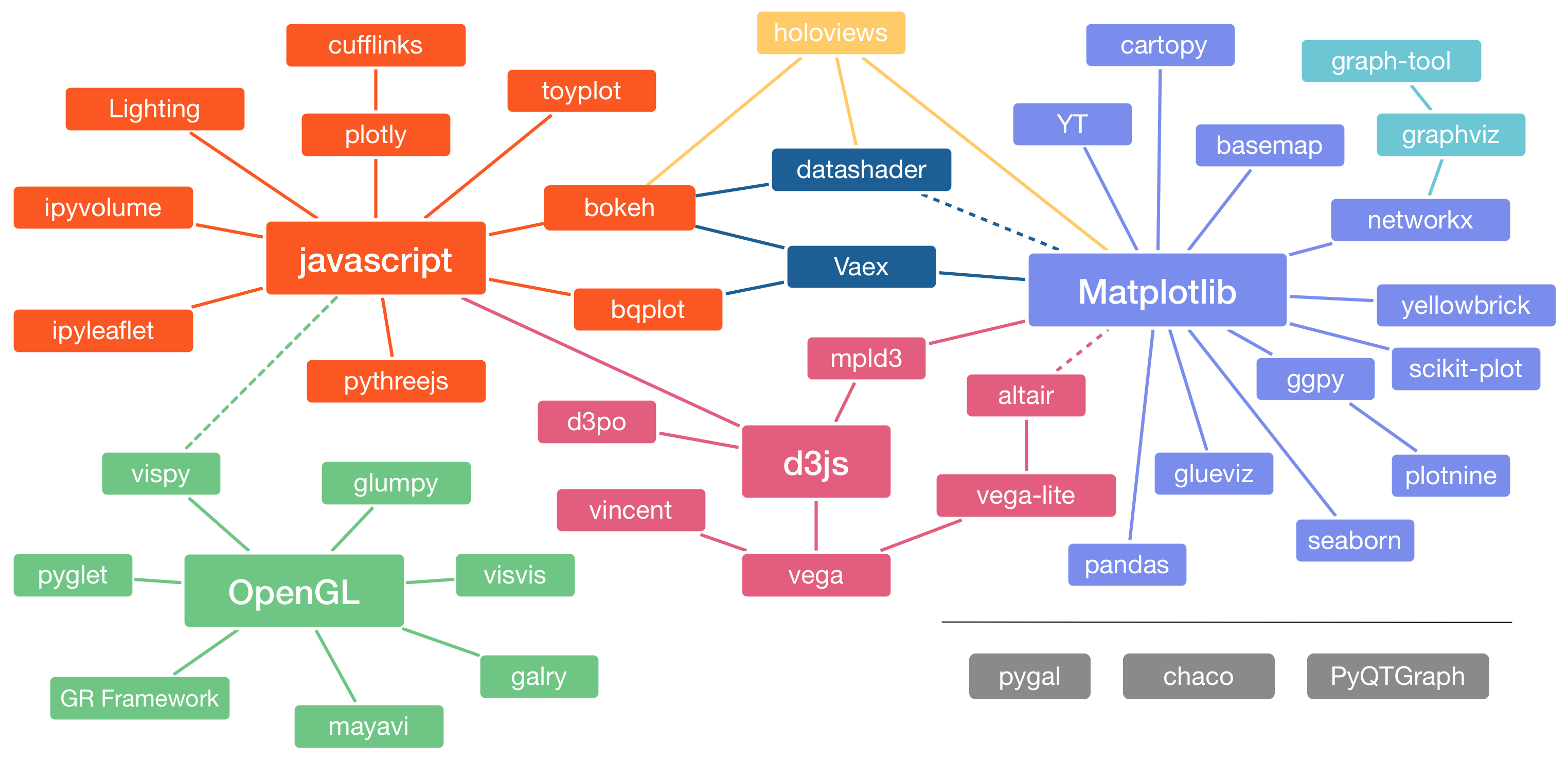

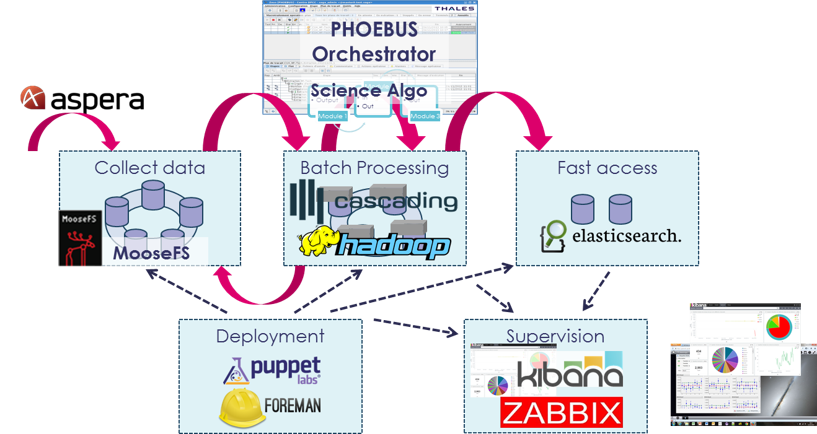

Legacy “Big Data” ecosystem

Booming ecosystem

Hadoop & Map Reduce

NoSQL (Not only SQL)

Logs, ETL, Time series

Dataviz

BI (software)

Python (libraries)

Data Science and Machine Learning

Quiz

Which technology is the most representative of the Big Data world?

- Answer A: Spark

- Answer B: Elasticsearch

- Answer C: Hadoop

- Answer D: TensorFlow

- Answer E: MPI (Message Passing Interface)

Answer link Key: dy

Big Data use cases

Typical Dataset (originally)

Huge number of small objects:

- Billions of records

- KB to MB range

Think of:

- Web pages, and the words they contain

- Tweets

- Text files, where each line is a record

- IoT and everyday life sensors: a record per second, minute or hour.
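The "text files, where each line is a record" case is the classic word-count exercise associated with the Map Reduce model. The following is a minimal single-process sketch of that model, assuming two tiny in-memory records; the function names `map_phase`, `shuffle` and `reduce_phase` are illustrative and do not correspond to the Hadoop API.

```python
from collections import defaultdict


def map_phase(record):
    """Emit a (word, 1) pair for every word in one record (one line of text)."""
    return [(word.lower(), 1) for word in record.split()]


def shuffle(pairs):
    """Group emitted values by key, as a framework would between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values emitted for one key into a single count."""
    return key, sum(values)


# Illustrative input: each string stands for one line of a text file.
records = ["big data is big", "data is everywhere"]

pairs = [pair for record in records for pair in map_phase(record)]
counts = dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())
print(counts)
```

In a real cluster the map calls run in parallel on the nodes that hold the records, and only the grouped pairs travel over the network; here everything runs sequentially to keep the data flow visible.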

Cost effective storage and processing

- Commodity hardware (standard servers, disks and network)

- Horizontal scalability

- Proximity of Storage and Compute

- Secure storage (redundancy or Erasure Coding)

Use cases:

- Archiving

- Massive volume handling

- ETL (Extract Transform Load)
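The trade-off between redundancy (full copies) and erasure coding can be illustrated with simple arithmetic on raw storage cost. This is a sketch under stated assumptions: the choice of 3 copies and of a 6 data + 3 parity block layout are illustrative values, not figures from these slides, and the helper names are hypothetical.

```python
def replication_overhead(copies):
    """Raw bytes stored per byte of user data when keeping full copies."""
    return float(copies)


def erasure_coding_overhead(data_blocks, parity_blocks):
    """Raw bytes stored per byte of user data with data + parity blocks."""
    return (data_blocks + parity_blocks) / data_blocks


user_data_pb = 1.0  # illustrative dataset size in petabytes

# Assumption: 3 full copies, which survives the loss of 2 copies.
raw_replicated_pb = user_data_pb * replication_overhead(3)

# Assumption: 6 data + 3 parity blocks, which survives the loss of any 3 blocks.
raw_erasure_coded_pb = user_data_pb * erasure_coding_overhead(6, 3)

print(f"replication: {raw_replicated_pb} PB raw, "
      f"erasure coding: {raw_erasure_coded_pb} PB raw")
```

Under these assumptions erasure coding stores half as many raw bytes as replication, at the price of extra computation when blocks must be rebuilt.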

Data mining, data value, data cross processing

Extract new knowledge and value from the data:

- Statistics,

- Find new Key Performance Indicators,

- Explain your data with no prior knowledge (Data Mining)

Cross analysis of internal and external data, correlations:

- Trends with news or social network stream and correlation to sales

- Near real time updates with Stream processing

Scientific data processing

Data production or scientific exploration:

- Stream processing, or near real time processing from sensor data

- Distributed processing of massive volumes of incoming data on a computing farm

- Data exploration and analysis

- Data Science
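Stream processing of sensor data usually means updating a result each time a new reading arrives, instead of waiting for a complete dataset. A minimal sketch, assuming a hypothetical stream of temperature readings and a sliding window of two values; `rolling_mean` is an illustrative helper, not a function of any streaming framework.

```python
from collections import deque


def rolling_mean(stream, window):
    """Yield the mean of the last `window` readings each time a new one arrives."""
    recent = deque(maxlen=window)  # old readings are dropped automatically
    for reading in stream:
        recent.append(reading)
        yield sum(recent) / len(recent)


# Illustrative readings; a real stream would come from a sensor or a message queue.
readings = [20.0, 22.0, 24.0, 26.0]
means = list(rolling_mean(iter(readings), window=2))
print(means)
```

Because the generator keeps only the last `window` values in memory, the same code works on a stream of unbounded length.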

Other main use cases

- Digital twins

- Predictive maintenance

- Smart City

- Real time processing

Quiz

What are the typical volumes of scientific datasets (multiple choices)?

- Answer A: MBs

- Answer B: GBs

- Answer C: TBs

- Answer D: PBs

- Answer E: EBs

Answer link Key: ri

Big Data to Machine Learning

The Big Data ecosystem allows (part of) machine learning to be effective

- More data = more precise models

- Deep Learning is difficult without large (possibly generated) input datasets

- Tools to collect, store, filter, index, structure data

- Tools to analyse and visualize data

- Real time model learning

https://blog.dataiku.com/when-and-when-not-to-use-deep-learning

Preprocessing before machine learning

- Data wrangling and exploration

- Feature engineering: unstructured data to input features

- Cross multiple data sources

- Get insights on the data before processing it (statistics, visualization)
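Feature engineering on unstructured data can be as simple as turning free text into fixed-length count vectors (a "bag of words"). The sketch below assumes two tiny example documents and whitespace tokenization; `build_vocabulary` and `to_features` are hypothetical helpers written for illustration.

```python
from collections import Counter


def build_vocabulary(documents):
    """Map every distinct word to a column index, in alphabetical order."""
    words = sorted({word for doc in documents for word in doc.lower().split()})
    return {word: index for index, word in enumerate(words)}


def to_features(document, vocabulary):
    """Turn one text document into a vector of word counts."""
    counts = Counter(document.lower().split())
    return [counts.get(word, 0) for word in vocabulary]


# Illustrative unstructured input.
documents = ["big data is big", "data is everywhere"]

vocabulary = build_vocabulary(documents)
features = [to_features(doc, vocabulary) for doc in documents]
print(list(vocabulary), features)
```

Each document becomes a row with one column per vocabulary word, which is the kind of structured input most learning algorithms expect.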

Distribute datasets and algorithms

- For preprocessing as seen above

- Means to load and learn on large volumes by distributing storage

- Distributed learning with data locality on big datasets

- Distributed hyperparameter search
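A hyperparameter search distributes well because each candidate configuration can be evaluated independently. The sketch below uses a local thread pool from the Python standard library as a stand-in for a cluster scheduler; the `evaluate` function is a hypothetical toy objective replacing real model training, and the grid values are arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def evaluate(params):
    """Hypothetical stand-in for training a model and returning its validation loss."""
    learning_rate, depth = params
    return (learning_rate - 0.1) ** 2 + (depth - 5) ** 2


# Every combination of the candidate values is one independent task.
grid = list(product([0.01, 0.1, 1.0], [3, 5, 7]))

# Local threads stand in for remote workers; results keep the order of `grid`.
with ThreadPoolExecutor(max_workers=4) as pool:
    losses = list(pool.map(evaluate, grid))

best_loss, best_params = min(zip(losses, grid))
print(f"best parameters: {best_params}, loss: {best_loss}")
```

With a distributed framework the structure stays the same: build the list of configurations, map the evaluation function over it on remote workers, and reduce the results to the best one.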

Quiz

Are Big Data and Machine Learning the same?

Answer link Key: fj