Spark Introduction

Guillaume Eynard-Bontemps, CNES (Centre National d’Etudes Spatiales - French Space Agency)

2020-11-15

Spark History

- Research project at the UC Berkeley AMPLab, started in 2009

- Open sourced in early 2010

- 2013: Moved to Apache Software Foundation

- 2014: 1.0 release, top level Apache project

- 2016: 2.0 release

- 2020: 3.0 release

- Now developed by hundreds of contributors

Spark goal and key features

From the Spark Research homepage:

> Our goal was to design a programming model that supports a much wider class of applications than MapReduce, while maintaining its automatic fault tolerance. In particular, MapReduce is inefficient for multi-pass applications (…).

- Iterative algorithms (many machine learning algorithms)

- Interactive data mining

- Streaming applications that maintain aggregate state over time

Spark vs MapReduce

- An alternative to MapReduce that provides in-memory processing (up to 100x faster on some workloads)

- Plus a broader ecosystem: additional tools and higher-level APIs

Tools and ecosystem

Quiz

What’s the main difference between Spark and Hadoop MapReduce?

- Answer A: Spark uses another algorithm at the heart of its computing model

- Answer B: Spark can work in memory and is much faster

- Answer C: Spark has a better name

- Answer D: Spark provides many more APIs

Answer link Key: hg

APIs

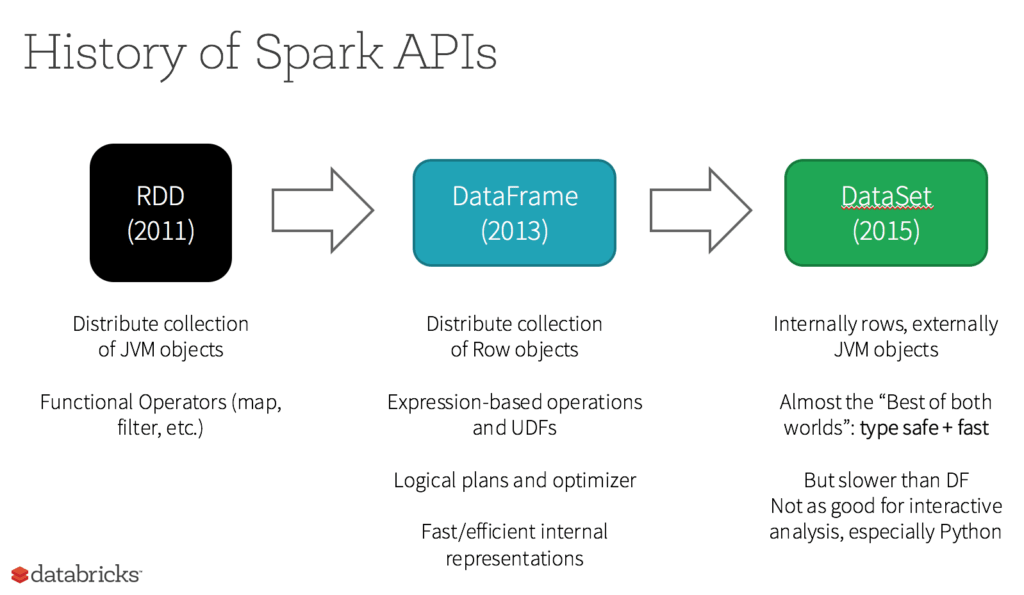

Resilient Distributed Dataset (RDD)

- Was the primary user-facing API in early versions of Spark

- An RDD is an

  - immutable

  - distributed

  - collection of elements of your data

  - partitioned across nodes in your cluster

  - operated on in parallel with a low-level API

- Offers transformations and actions

- Unstructured datasets

- Functional programming constructs

- In memory, fault tolerant

- No schema, less optimization
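The properties listed above can be illustrated with a small plain-Python sketch. It is an analogy, not Spark's API: the helper names `partition` and `map_partitions` are hypothetical, tuples stand in for immutable partitions, and a thread pool stands in for the nodes of a cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, num_partitions):
    """Split a sequence into immutable chunks (tuples), one per 'node'."""
    data = tuple(data)
    size = -(-len(data) // num_partitions)  # ceiling division
    return tuple(data[i:i + size] for i in range(0, len(data), size))


def map_partitions(partitions, func):
    """Apply func to every element, one worker per partition.

    Returns NEW partitions; the input partitions are never modified.
    """
    workers = max(1, len(partitions))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return tuple(
            pool.map(lambda part: tuple(func(x) for x in part), partitions)
        )


# Eight elements spread over four partitions.
numbers = partition(range(1, 9), num_partitions=4)

# A transformation yields a new collection; `numbers` is left untouched.
squares = map_partitions(numbers, lambda x: x * x)

print(numbers)
print(squares)
```

In PySpark, the roughly corresponding calls would be `sc.parallelize(data, numSlices)` and `rdd.map(func)`, assuming an existing `SparkContext` named `sc`.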

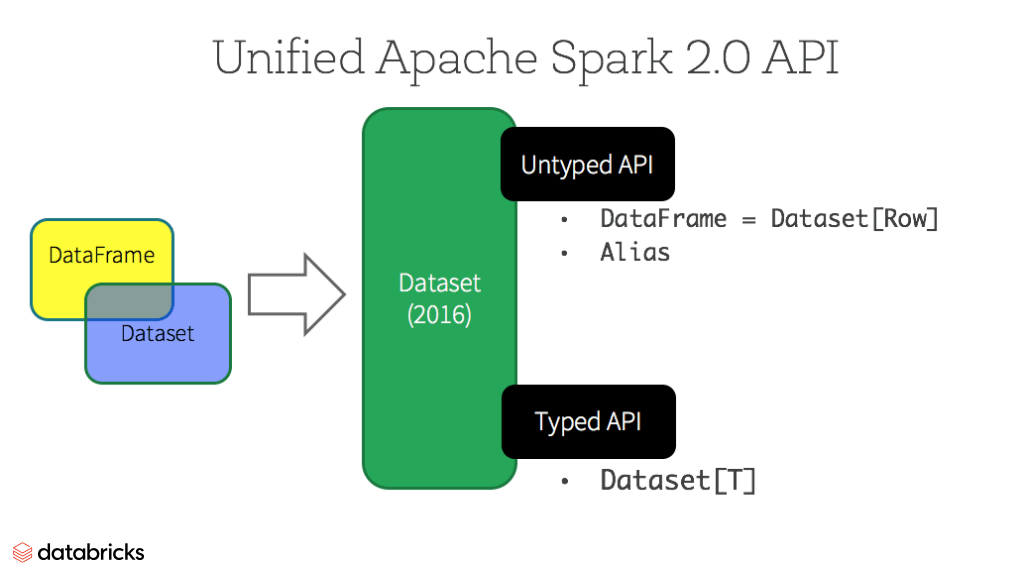

DataFrames and Datasets

- Also an immutable distributed collection of data

- Built on top of RDDs

- Structured data, organized in named columns

- Impose a structure, which gives a higher-level abstraction

- SQL-like operations

- Dataset: strongly typed objects (Scala and Java only)

- Catalyst optimizer, better performance
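To make the link between "named columns" and "better optimization" more concrete, here is a hedged plain-Python sketch. It does not use Spark. The function `run_query`, the tuple-based query description, and the sample rows are all made up for illustration. The point is only that a declarative query over a schema can be inspected (for example to see which columns it needs), whereas an opaque function over positional records cannot.

```python
import operator

SCHEMA = ("name", "age", "city")
ROWS = [
    ("alice", 34, "Paris"),
    ("bob", 27, "Toulouse"),
    ("carol", 41, "Toulouse"),
]

# RDD style: positional access inside opaque code.
# An engine cannot tell which fields this expression reads.
rdd_result = [row[0] for row in ROWS if row[1] > 30]

# DataFrame style: the query is data, expressed with column names.
OPS = {">": operator.gt, "<": operator.lt, "==": operator.eq}


def run_query(schema, rows, select, where):
    """Evaluate a (column, op, value) filter and project the selected columns.

    Also returns the set of columns the query touches, which is the kind of
    information an optimizer could use to skip reading unused columns.
    """
    column, op, value = where
    needed = set(select) | {column}
    index = {name: i for i, name in enumerate(schema)}
    result = [
        tuple(row[index[c]] for c in select)
        for row in rows
        if OPS[op](row[index[column]], value)
    ]
    return result, needed


df_result, needed_columns = run_query(
    SCHEMA, ROWS, select=("name",), where=("age", ">", 30)
)
print(df_result, sorted(needed_columns))
```

Both styles return the same names, but only the second one exposes that the `city` column is never used.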

Transformations and Actions

Transformations

- Create a new (immutable) dataset from an existing one

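- Instruct Spark how you would like to modify the data

- Narrow dependencies: each input partition contributes to a single output partition (1 to 1)

- Wide dependencies (shuffles, as in MapReduce): each input partition contributes to many output partitions (1 to N)

- Transformations in Spark are lazy

  - No computation is triggered

  - Spark just remembers the transformations applied to the input dataset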
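The difference between narrow and wide dependencies can be sketched in plain Python, with lists standing in for partitions. The function names `narrow_map` and `wide_group_by_key` are hypothetical, and a simple modulo on integer keys stands in for a hash partitioner. This is an illustration of the idea, not how Spark implements shuffles.

```python
def narrow_map(partitions, func):
    """Narrow dependency: output partition i depends only on input partition i."""
    return [[func(x) for x in part] for part in partitions]


def wide_group_by_key(partitions, num_output_partitions):
    """Wide dependency: every input partition may feed every output partition.

    Records sharing a key must end up in the same output partition,
    so data has to be redistributed (the "shuffle").
    """
    outputs = [dict() for _ in range(num_output_partitions)]
    for part in partitions:
        for key, value in part:
            target = key % num_output_partitions  # stand-in for hashing the key
            outputs[target].setdefault(key, []).append(value)
    return outputs


partitions = [[1, 2, 3], [4, 5, 6]]

# Narrow: tag each number with its parity, partition by partition.
keyed = narrow_map(partitions, lambda x: (x % 2, x))

# Wide: values for one key come from both input partitions.
shuffled = wide_group_by_key(keyed, num_output_partitions=2)

print(keyed)
print(shuffled)
```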

Actions

- Return a value to the driver program after running a computation on the dataset
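The interplay between lazy transformations and actions can be mimicked with a minimal plain-Python class. `LazyDataset` is a hypothetical name and a strong simplification: it only records `map` and `filter` steps and replays them when an action such as `collect` or `count` is called.

```python
class LazyDataset:
    """Toy dataset that records transformations and runs them only on actions."""

    def __init__(self, source, lineage=()):
        self._source = source
        self._lineage = lineage  # tuple of (kind, function) steps

    # Transformations: return a NEW dataset, compute nothing.
    def map(self, func):
        return LazyDataset(self._source, self._lineage + (("map", func),))

    def filter(self, predicate):
        return LazyDataset(self._source, self._lineage + (("filter", predicate),))

    def _compute(self):
        items = iter(self._source)
        for kind, func in self._lineage:
            items = map(func, items) if kind == "map" else filter(func, items)
        return items

    # Actions: replay the recorded steps and return a value to the caller.
    def collect(self):
        return list(self._compute())

    def count(self):
        return sum(1 for _ in self._compute())


calls = []


def double(x):
    calls.append(x)  # record every real invocation
    return 2 * x


base = LazyDataset(range(5))
pipeline = base.map(double).filter(lambda x: x % 4 == 0)

calls_before_action = len(calls)  # nothing has run yet
result = pipeline.collect()       # the action triggers the computation

print(calls_before_action, result, len(calls))
```

Because each transformation returns a new object, `base` can still be collected on its own afterwards, which mirrors the immutability of Spark datasets.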

Transformations and Actions examples

Transformations

| Transformations |
|---|
| map* |
| filter |
| groupByKey |

Actions

| Actions |
|---|
| reduce |
| collect |
| count |
| first |
| take |
| saveAs… |
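As a rough illustration of how these operations combine, here is a word count written in plain Python, with each step labelled by the Spark operation it imitates. The sample sentences are invented, and the PySpark method names in the comments (`flatMap`, `map`, `groupByKey`, `mapValues`, `count`, `take`) are only pointers to the real API, not calls made by this sketch.

```python
from collections import defaultdict
from functools import reduce

lines = ["spark is fast", "spark is lazy", "mapreduce is older"]

# Transformations (imitating rdd.flatMap then rdd.map):
# split lines into words and emit (word, 1) pairs.
pairs = [(word, 1) for line in lines for word in line.split()]

# Transformation (imitating rdd.groupByKey): gather all values per key.
groups = defaultdict(list)
for word, one in pairs:
    groups[word].append(one)

# Transformation (imitating rdd.mapValues with a reduction): sum the ones per word.
counts = {
    word: reduce(lambda a, b: a + b, ones) for word, ones in groups.items()
}

# Actions: return plain values to the "driver".
total_words = len(pairs)  # imitates rdd.count()
top_two = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:2]  # imitates a take

print(total_words)
print(top_two)
```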

Some code

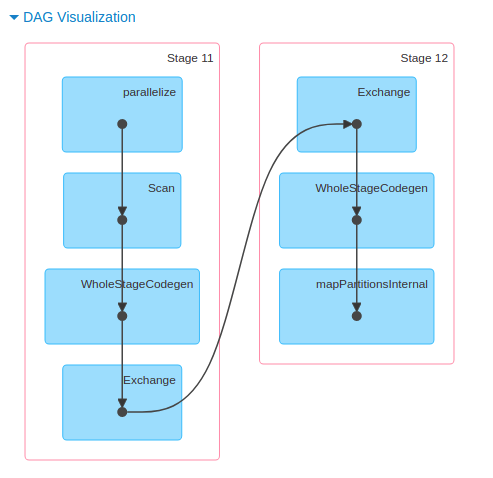

Execution Plan and DAGs

Streaming

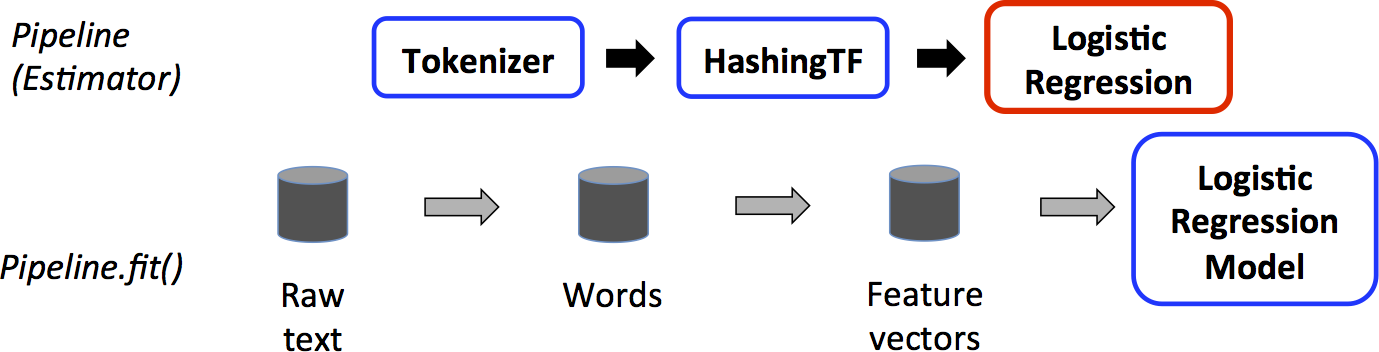

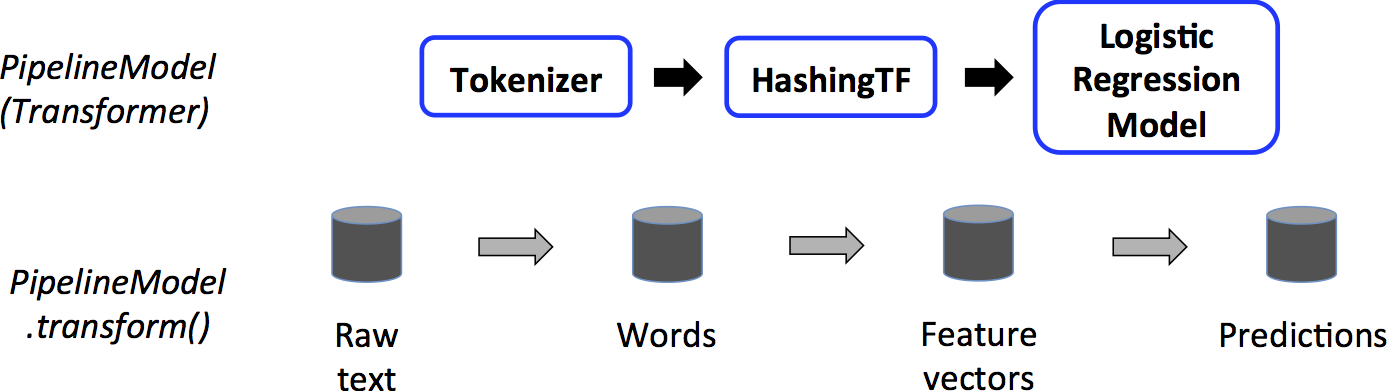

MLlib

- Uses the DataFrame API for its inputs

- Pipelines are made of

  - Transformers (analogous to transformations)

  - Estimators (comparable to actions)
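The Transformer and Estimator roles can be sketched without Spark. In the plain-Python example below, all class names (`Scaler`, `MeanCenterer`, `MeanCentererModel`, `Pipeline`, `PipelineModel`) are hypothetical stand-ins, and rows are plain dictionaries rather than DataFrames. What it mirrors from Spark ML is the contract: a Transformer exposes `transform`, and an Estimator exposes `fit`, which returns a fitted Transformer.

```python
class Scaler:
    """Transformer: stateless, adds a 'scaled' column."""

    def __init__(self, factor):
        self.factor = factor

    def transform(self, rows):
        return [{**r, "scaled": r["x"] * self.factor} for r in rows]


class MeanCentererModel:
    """Transformer produced by fitting MeanCenterer."""

    def __init__(self, column, mean):
        self.column, self.mean = column, mean

    def transform(self, rows):
        return [{**r, "centered": r[self.column] - self.mean} for r in rows]


class MeanCenterer:
    """Estimator: must look at the data (fit) before it can transform."""

    def __init__(self, column):
        self.column = column

    def fit(self, rows):
        mean = sum(r[self.column] for r in rows) / len(rows)
        return MeanCentererModel(self.column, mean)


class PipelineModel:
    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows


class Pipeline:
    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if hasattr(stage, "fit"):  # Estimator: fit it on the current data
                stage = stage.fit(rows)
            rows = stage.transform(rows)
            fitted.append(stage)
        return PipelineModel(fitted)


training = [{"x": 1.0}, {"x": 2.0}, {"x": 3.0}]
model = Pipeline([Scaler(10.0), MeanCenterer("scaled")]).fit(training)

train_out = model.transform(training)
new_out = model.transform([{"x": 5.0}])  # reuses the mean learned during fit

print(train_out)
print(new_out)
```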

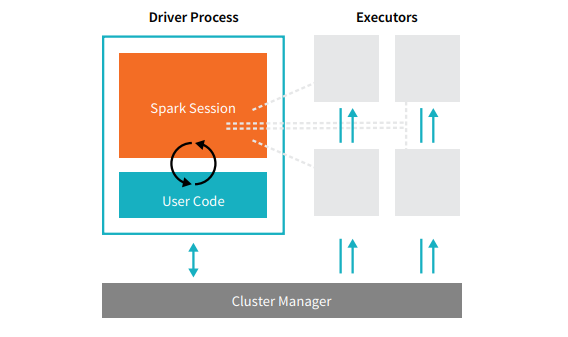

Spark Application and execution

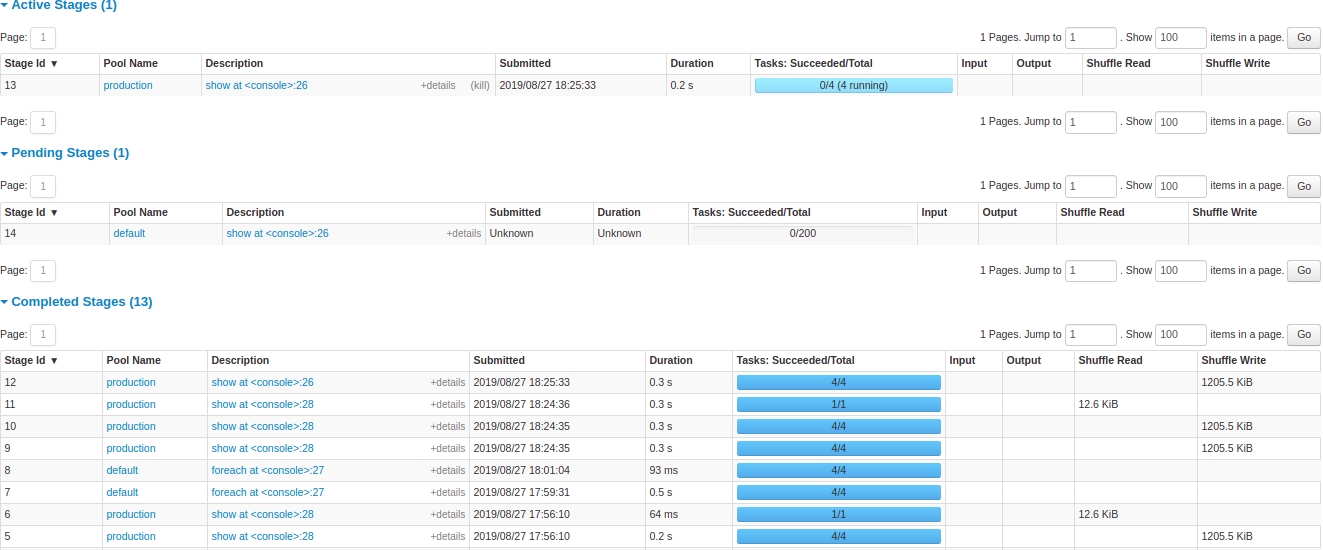

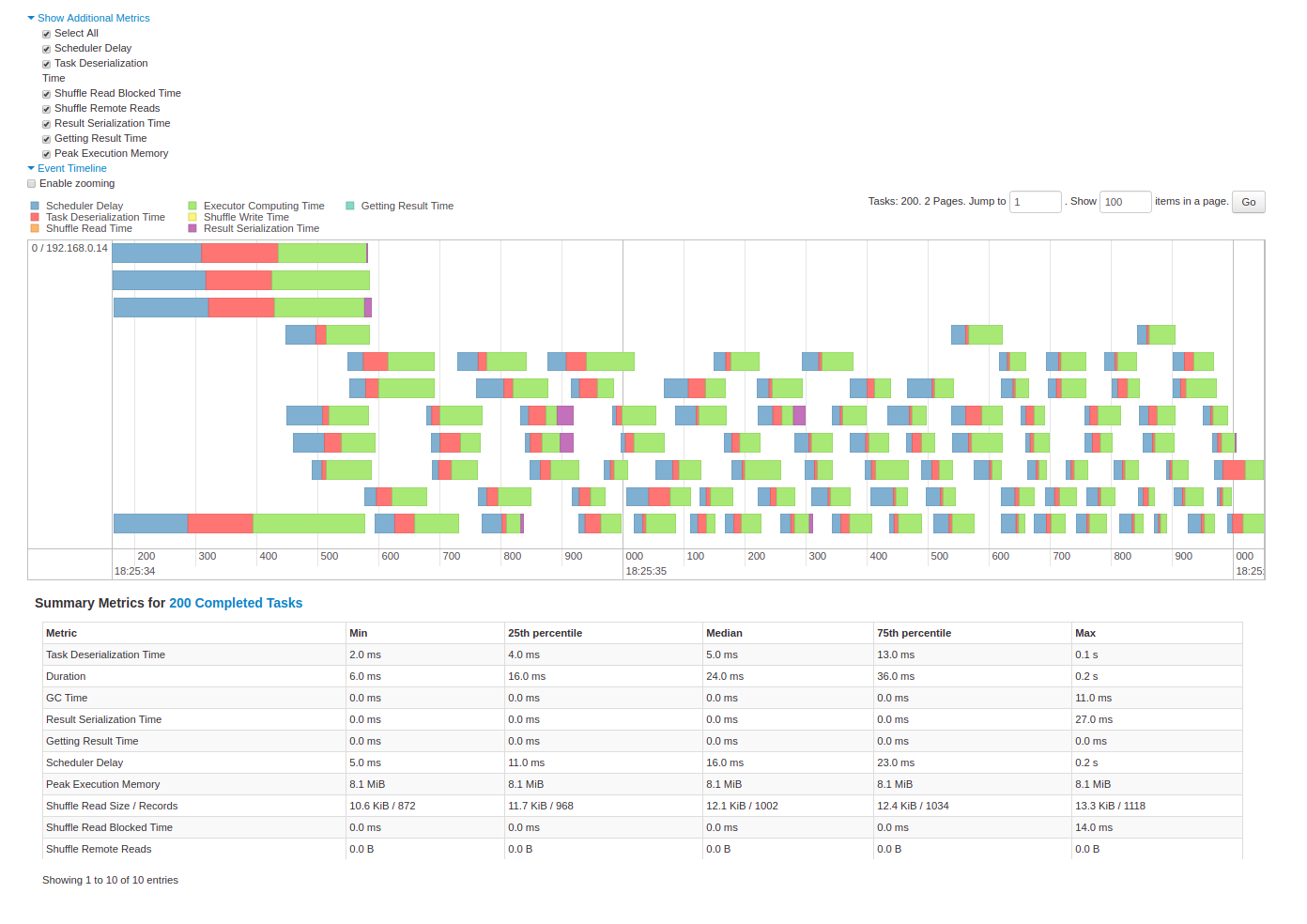

Dashboard

Dashboard 2

Quiz

What’s the main API of Spark?

- Answer A: MLlib

- Answer B: RDDs (Resilient Distributed Datasets)

- Answer C: Datasets

- Answer D: Transformations

Answer link Key: op

Play with MapReduce through Spark

Context

- Interactive notebook (developed some years ago…)

- Pre-configured

- Warm up on Py computation

- RDDs

- DataFrames

Notebook

Easiest: